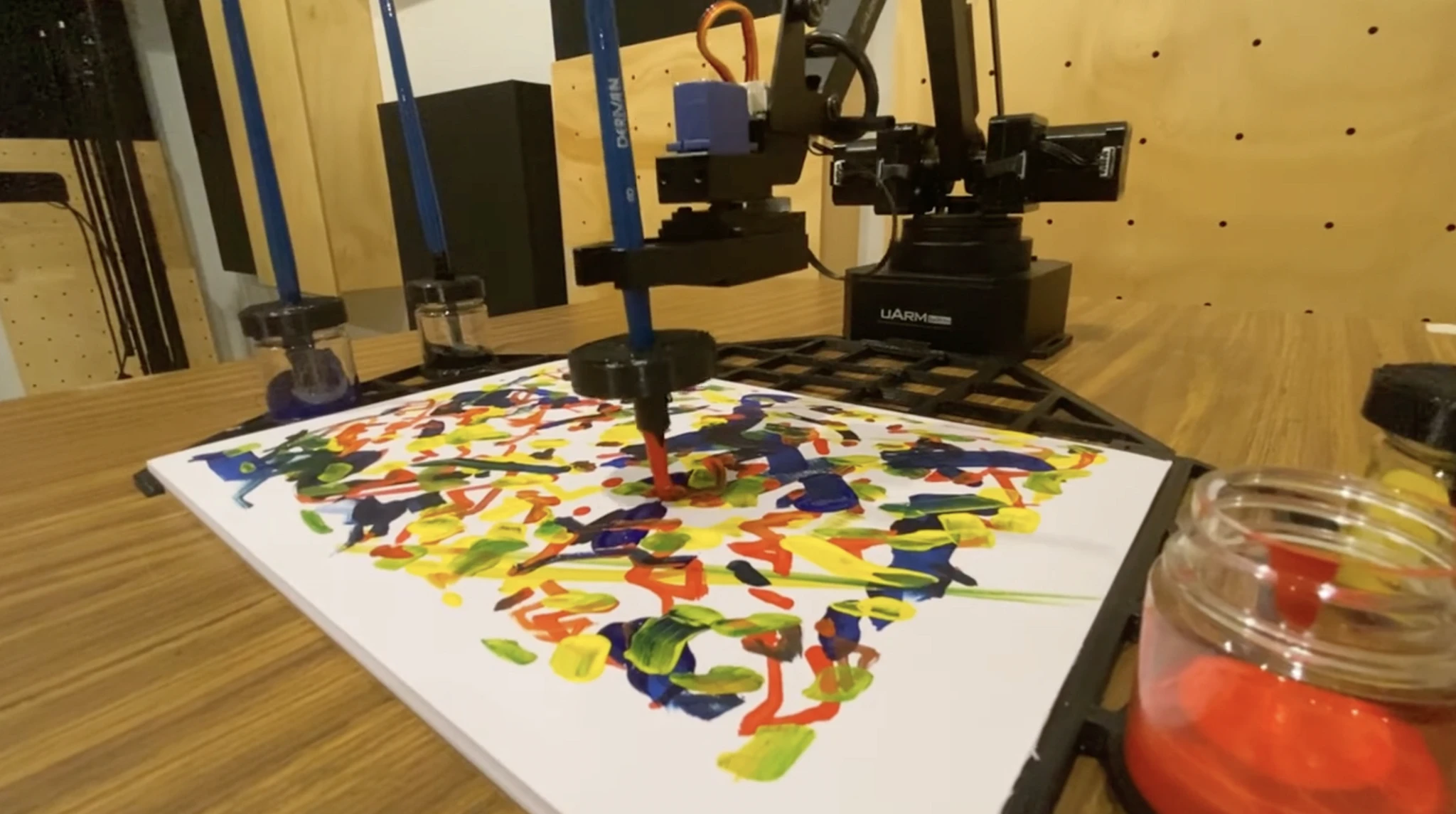

Meet Tessa, our real-time generative artist.

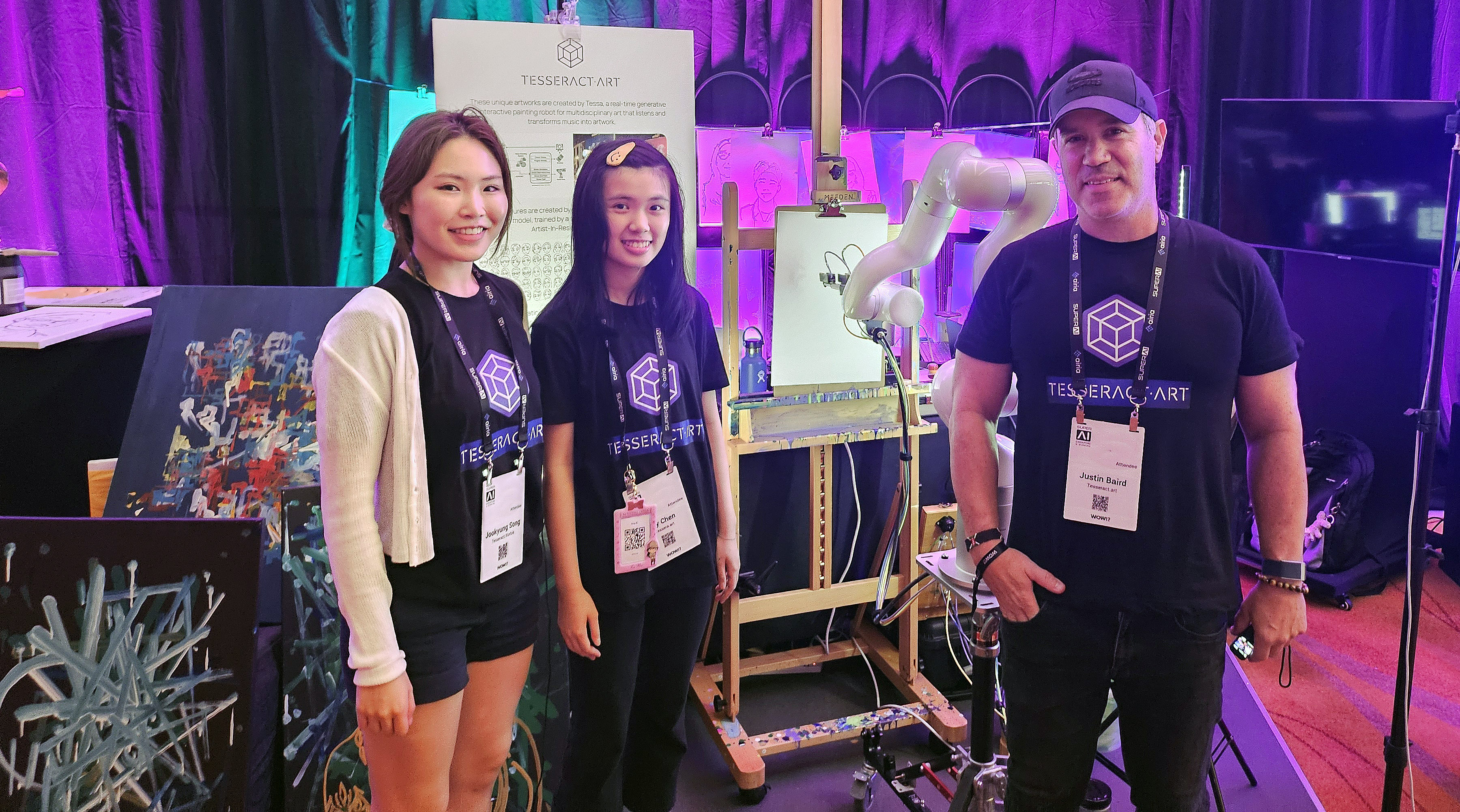

Tesseract.art is a real-time generative AI interactive painting robot for multidisciplinary art creation, developed collaboratively by Justin Baird and Dr Richard Savery.

Our project aims to explore and develop creative artificial intelligence as an instrument, leading to the emergence of new art forms and novel relationships between creators and their artistic processes. Integrating AI into creative processes raises significant questions about authorship, control, and the nature of creativity itself. Check out our performances and videos, as well as our published papers and projects.

Our approach

Our initial idea stems from the interaction between musicians and technology, and from how artificial intelligence could augment human expression in one form and transform it into other physical forms. Our first study examined real-time interaction between a human violinist and a robotic agent able to paint new artwork based on the violinist's playing. The study confirmed the potential of future cross-modal interaction between agents and humans.

The first work led us to a larger-scale implementation for improved artwork creation. We wanted to paint with an arm the size of a human arm, on a canvas mounted on an easel, using acrylic paints. To enable efficient, unassisted robotic manipulation, we designed augmented art brushes for use with a standard robotic gripper end-effector, allowing variable colour selection.

In a live performance with musicians, the second version of the system proved effective, producing two new artworks derived from two different compositions.

Our system is intended to work in real time, meaning that an artwork is created in the same amount of time as the musical performance. To improve the system further, we identified brush selection as a source of inefficiency: because of all the arm movements required to select brushes, the robot spent only 25% of the performance time actually painting.

Improving colour selection efficiency led to our next brush invention. The new brush system incorporates electronic logic control, peristaltic pumps, elastomeric tubing, and linear actuators, all housed in a 3D-printed end-effector that enables the system to select colours autonomously. The system was further improved with a larger robotic arm able to reach across the entire area of a large canvas.

For our third study, the system was used in a live performance with a larger ensemble playing loud, amplified music. The more intense audio spectrum and higher number of onsets resulted in a bold acrylic painting with heavier paint usage and more mixing of colours.

In our next study, we expanded the system to use watercolours as well as acrylic paints. This study also advanced the interaction by incorporating face tracking of head motion and facial expression.

Most recently, we have expanded the system with additional modalities to enable collaborative painting with human artists.

Through this work we explore the inherent ambiguity surrounding control in this collaborative environment. We examine the benefits derived from the interaction between human and machine, questioning who holds creative agency and how this interplay shapes the artistic output. By analysing these interactions, we aim to shed light on the evolving role of AI in the arts and its potential to redefine traditional notions of creativity and authorship.

Browse the pages of this website to find out more about our progress, papers, videos and performances.