Proof of Concept

Jul 5, 2022

Our project is a multidisciplinary collaboration of creativity and technology - combining robotics, artificial intelligence, music and the visual arts. This poses considerable challenges, particularly as music is a temporal art form that unfolds through time, while a painting is a fixed medium - creating a tension between short-term musical style and the overall generation of an artwork.

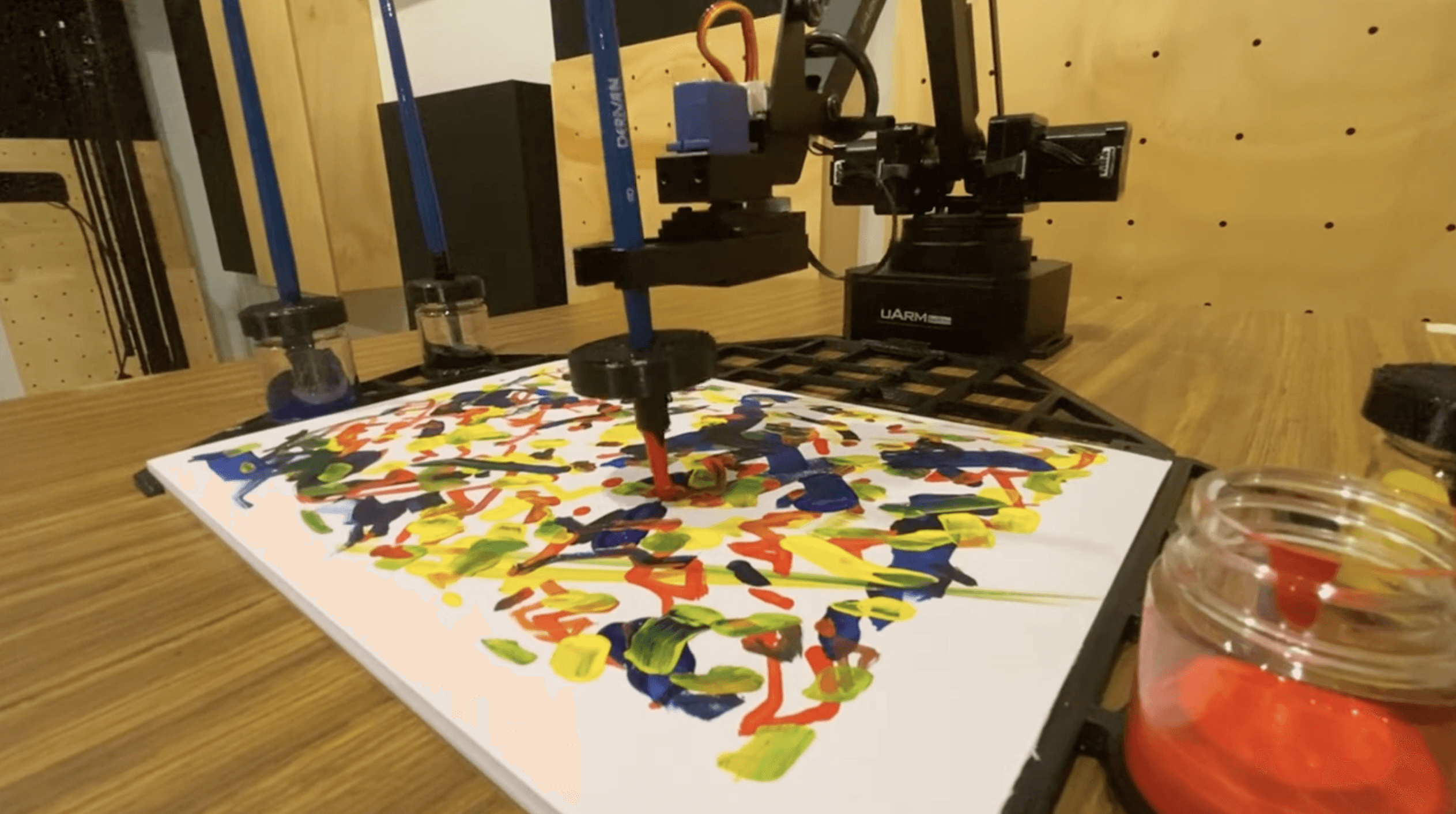

For our first proof of concept, we started with a small desktop robot that could paint on an A4-size piece of art paper. Richard is in Sydney and I am in Singapore, so we each got the same robot, the same 3D printer and the same hardware setup so that we could collaborate remotely. We have also received advice and support from the technical and art industries in both Australia and Singapore.

Special thanks go to Steven Paddo Patterson, CEO of Derivan Art Supplies and maker of the world-famous Matisse acrylic artist paints in Sydney, Australia. We have been working with Paddo to select paints with the right viscosity and brushes that the robot can control, and he has given us all of the advice and recommendations we needed to get started with programming brush strokes and mimicking painting techniques.

There were a lot of physical challenges to get through. The robot arm needs to be able to pick up and put down brushes, the paper can’t move around while painting, and something needs to be done to get more paint on the brush! We went through many iterations, figuring out how the robot could best hold the brushes, how the paper and the robot could stay aligned, and how the brushes could best be dipped into paints of different colours.

After many design iterations and many hours of 3D printing, we were ready for a first live performance with a musician. Richard and I did this together in a recording studio located at Macquarie University in North Sydney. Conveniently, Richard’s wife Anna is an accomplished improvisational violinist, and she joined us for the collaborative performance.

Our focus for the proof of concept was on a collaborative creation, where the final artwork was directly inspired by Anna’s live performance, and where Anna’s improvisation was inspired by what the robot painted.

The painting canvas has preset coordinates, locked into place through the use of a 3D printed frame. In this way the robot has a set knowledge of the x and y boundaries of the canvas, as well as the positions of each paint brush.
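A minimal sketch of how preset canvas boundaries and brush positions might be represented. The A4 dimensions (210 x 297 mm) are standard, but the frame offset, the brush holder coordinates and all names here are hypothetical and not taken from the project code.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Canvas:
    """Fixed painting area in robot coordinates (millimetres)."""
    x_min: float            # hypothetical offset of the frame from the robot base
    y_min: float
    width: float = 210.0    # A4 short edge
    height: float = 297.0   # A4 long edge

    def to_robot(self, u: float, v: float) -> Tuple[float, float]:
        """Map normalised canvas coordinates (0..1) to robot x, y.

        Values outside 0..1 are clamped so a stroke can never leave the paper.
        """
        u = min(max(u, 0.0), 1.0)
        v = min(max(v, 0.0), 1.0)
        return (self.x_min + u * self.width, self.y_min + v * self.height)


# Hypothetical placement of the frame and the brush holders.
CANVAS = Canvas(x_min=100.0, y_min=-148.5)
BRUSH_POSITIONS = {
    "red": (60.0, -120.0),
    "blue": (60.0, -60.0),
    "yellow": (60.0, 0.0),
    "green": (60.0, 60.0),
}
```

Because the frame locks the paper in place, these values only need to be measured once; every stroke can then be described in canvas-relative terms and converted with `CANVAS.to_robot(u, v)`.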

We modified the brushes by attaching 3D printed caps that allowed for the brushes to sit in the paint containers vertically at the desired height to dip into the paint below.

To paint each gesture, the robot moves into position and then modifies the z position of the arm. For this prototype version we used the basic colours of red, blue, yellow and green, with the robot able to switch between colours at any time. Additionally, the robot automatically applies more paint to the brush after three brush strokes.
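The gesture logic described above can be sketched roughly as follows. The two heights, the action tuples and the `StrokePlanner` name are assumptions made for illustration; only the rule of reloading after three strokes comes from the text, and dipping on a colour change is our guess at sensible behaviour.

```python
from typing import List, Optional, Tuple

Z_TRAVEL = 20.0       # hypothetical clearance height above the paper (mm)
Z_PAINT = 0.0         # hypothetical height where the brush touches the paper (mm)
STROKES_PER_DIP = 3   # from the text: more paint after three brush strokes

Point = Tuple[float, float]
Waypoint = Tuple[float, float, float]


def stroke_waypoints(path: List[Point]) -> List[Waypoint]:
    """Turn a 2D path into 3D waypoints: approach above, lower, drag, lift."""
    if not path:
        return []
    (x0, y0), (x1, y1) = path[0], path[-1]
    points = [(x0, y0, Z_TRAVEL)]
    points += [(x, y, Z_PAINT) for x, y in path]
    points.append((x1, y1, Z_TRAVEL))
    return points


class StrokePlanner:
    """Tracks the current colour and how many strokes were made since the last dip."""

    def __init__(self) -> None:
        self.colour: Optional[str] = None
        self.strokes_since_dip = 0

    def plan(self, path: List[Point], colour: str) -> List[tuple]:
        """Return actions: ("dip", colour) and ("move", x, y, z)."""
        actions: List[tuple] = []
        # Assumption: dip when the colour changes or the brush has run dry.
        if colour != self.colour or self.strokes_since_dip >= STROKES_PER_DIP:
            actions.append(("dip", colour))
            self.colour = colour
            self.strokes_since_dip = 0
        actions.extend(("move", x, y, z) for x, y, z in stroke_waypoints(path))
        self.strokes_since_dip += 1
        return actions
```

Keeping the dip decision separate from the waypoint generation means the stroke shapes can change without touching the paint-reloading rule.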

The system is driven by the audio from the violin, with strokes matching the beginning and ending of phrases. The audio first goes into a program that analyses the pitches, the onsets and offsets (to measure note-lengths) and the density of notes per five second intervals. These values are then used to both choose the next stroke and choose when to start a new stroke. The stroke itself is generated in a Python script that then sends the stroke to the robot arm through a serial communication interface.
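As a rough sketch of the analysis step, the snippet below derives note lengths from onsets and offsets, counts notes in a five-second window, and maps those values to a stroke. The `Note` structure, the one-second threshold and the stroke names are hypothetical; the actual mapping used in the performance is not described here, and the serial transmission to the arm is left out.

```python
from dataclasses import dataclass
from typing import List, Optional

WINDOW_SECONDS = 5.0  # from the text: density of notes per five-second interval


@dataclass
class Note:
    onset: float   # seconds
    offset: float  # seconds
    pitch: float   # assumed to be a MIDI note number

    @property
    def length(self) -> float:
        """Note length measured from onset to offset."""
        return self.offset - self.onset


def recent_notes(notes: List[Note], now: float) -> List[Note]:
    """Notes whose onset falls within the last WINDOW_SECONDS."""
    return [n for n in notes if now - WINDOW_SECONDS < n.onset <= now]


def note_density(notes: List[Note], now: float) -> int:
    """Number of notes in the current five-second window."""
    return len(recent_notes(notes, now))


def choose_stroke(notes: List[Note], now: float) -> Optional[dict]:
    """Hypothetical mapping from audio features to a stroke description."""
    recent = recent_notes(notes, now)
    if not recent:
        return None  # silence: no new stroke
    mean_length = sum(n.length for n in recent) / len(recent)
    mean_pitch = sum(n.pitch for n in recent) / len(recent)
    # Assumption: long notes give sweeping strokes, short notes give dabs.
    kind = "long_sweep" if mean_length >= 1.0 else "short_dab"
    return {"kind": kind, "density": len(recent), "mean_pitch": mean_pitch}
```

For example, two sustained notes within the window would yield a `long_sweep`, while a run of quick notes would yield a `short_dab` with a higher density value.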

In our prototype recording, a custom-built audio interface was also used to expand the sonic possibilities of the solo acoustic violin. The audio processing was structured to complement the physical gestures of the robotic arm and to emphasise the use of different colours and the layering of strokes on the canvas.

A dual harmoniser created harmonic expansion, whilst fragmented live sampling added a type of accompaniment to the solo playing, bringing out the playfulness and variety of the multitude of different paint strokes used by the robotic painting arm.

From our perspective, the overall experience felt like a group improvisation, with three distinct performers - the robotic painting arm with the canvas as its instrument, the solo violinist improviser and the audio processing.

Performing with two inanimate partners proved both surprisingly rewarding and somewhat challenging for Anna. After playing through multiple iterations of the work, the timing of the performer’s gestures in response to the robotic arm movements became more natural.

Initially, all the deliberate synchronisations between the robotic arm and the violinist appeared somewhat forced, but after a period of extensive playing, both the music and gestures came together into one cohesive vibrant entity.

Receiving live visual feedback in the form of a painting affected the harmonic and rhythmic palette of the improvisation. The initial long, reflective strokes and sounds allowed for a slow build-up of rhythmic and harmonic complexities. On the other hand, the use of bright, primary colours on the canvas, with their vital mixes, led to the choice of consonant harmonies and syncopated double-time rhythmic passages. In this way the robot inspired changes in the music, even as the painting itself was generated by the music.

While the proof of concept was successful, there are many possible expansions and improvements to pursue.

Currently the robot does not consider timbre variations, inclusion of which would offer a wide range of potential control to the musician. For this performance the restrictions of the robot arm in degrees of freedom, speed and control allowed us to focus on certain areas and not become overwhelmed with possibilities.

In the future, using a higher quality robot arm with better gripper control would allow many more stroke types and subtle variations in the application of the brush itself, which were missing from this version.

We believe the relationship between music, long-term form, and painting over time also requires further research. Ultimately we believe this work shows significant future potential for cross-modal interaction between AI agents and human collaborators, and opportunities to incorporate creative robots into the development of new creative styles.